The recently released Jetson Nano is an inexpensive alternative to the Jetson TX1:

| Platform | CPU | GPU | Memory | Storage | MSRP |
|---|---|---|---|---|---|
| Jetson TX1 (Tegra X1) | 4x ARM Cortex A57 @ 1.73 GHz | 256x Maxwell @ 998 MHz (1 TFLOP) | 4 GB LPDDR4 (25.6 GB/s) | 16 GB eMMC | $499 |
| Jetson Nano | 4x ARM Cortex A57 @ 1.43 GHz | 128x Maxwell @ 921 MHz (472 GFLOPS) | 4 GB LPDDR4 (25.6 GB/s) | Micro SD | $99 |

Basically, for 1/5 the price you get 1/2 the GPU. Here's a detailed comparison of the entire Jetson line:

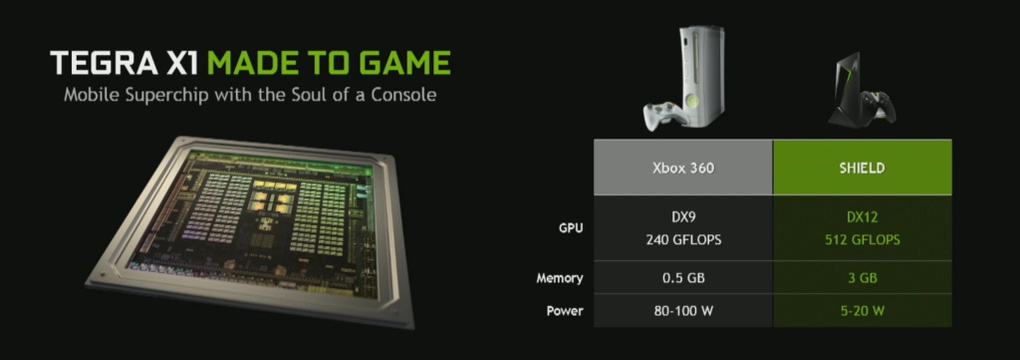

The X1 is the SoC that debuted in 2015 with the Nvidia Shield TV.

Fun Fact: During the GDC announcement, when Jensen and Cevat “play” Crysis 3 together, their gamepads aren’t connected to anything. Seth W. from Nvidia (Jensen) and I (Cevat) are playing backstage. “Pay no attention to that man behind the curtain!”

Mini-Rant: Memory Bandwidth

The memory bandwidth of 25.6 GB/s is a little disappointing. We did some work with K1 and X1 hardware and memory ended up being the bottleneck. It’s “conveniently” left out of the above table, but Xbox 360 eDRAM/eDRAM-to-main/main memory bandwidth is 256/32/22.4 GB/s.

Put another way, the TX1’s GPU hits 1 TFLOP while the original Xbox One GPU is 1.31 TFLOPS with main memory bandwidth of 68.3 GB/s (also ESRAM with over 100 GB/s, fugged-about-it). So, the Xbone has about 30% higher performance but almost 2.7x the memory bandwidth.

When I heard the Nintendo Switch was using a “customized” X1, I assumed the customization involved a new memory solution. Nope. Same LPDDR4 that (imho) would be a better fit for a GPU with 1/4-1/2 the performance. We haven’t done any Switch development, but I wouldn’t be surprised if many titles are bottlenecked on memory. The next most likely culprit is the CPU, if a title is overly dependent on 1-2 threads, but never the GPU.

Looks like we have to hold out until the TX2 to get “big boy pants”. It’s 1.3 TFLOPS with 58.3 GB/s of bandwidth (almost 2.3x the X1).
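To put the rant in numbers, here's a rough sketch that divides peak GFLOPS by memory bandwidth for the figures quoted above. The helper name `flops_per_byte` is made up for this post, and the inputs are peak marketing numbers rather than anything measured, so treat the output as a ballpark.

```shell
# Hypothetical helper: peak GFLOPS per GB/s of memory bandwidth.
# A higher number means more compute waiting on each byte of bandwidth.
# $1 = peak GFLOPS, $2 = memory bandwidth in GB/s
flops_per_byte() {
    awk -v gflops="$1" -v gbs="$2" 'BEGIN { printf "%.1f\n", gflops / gbs }'
}

# Figures as quoted in the text above
printf 'Jetson TX1:  %s\n' "$(flops_per_byte 1000 25.6)"
printf 'Jetson Nano: %s\n' "$(flops_per_byte 472 25.6)"
printf 'Xbox One:    %s\n' "$(flops_per_byte 1310 68.3)"
printf 'Jetson TX2:  %s\n' "$(flops_per_byte 1300 58.3)"
```

By this measure the TX1 has roughly twice as many FLOPs to feed per byte of bandwidth as the Xbox One, while the Nano lands close to the Xbox One's ratio.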

Installation

Follow the official directions. On Mac:

# For the SD card in /dev/disk2

sudo diskutil partitionDisk /dev/disk2 1 GPT "Free Space" "%noformat%" 100%

unzip -p ~/Downloads/nv-jetson-nano-sd-card-image-r32.3.1.zip | sudo dd of=/dev/rdisk2 bs=1m

# Wait 10-20 minutes

The OS install itself is over 9 GB and the Nvidia demos are quite large, so a 16 GB SD card fills up quickly. We recommend at least a 32 GB SD card.

It boots into an Nvidia-customized Ubuntu 18.04.

Initial Shell Access

Connecting a keyboard/display directly to the Nano is the easiest way to get started.

It is also possible to do a “headless” install:

- Place a jumper on J48

- Connect a 5V DC power adapter to the DC barrel jack (5.5mm/2.1mm)

- Connect a USB cable between host PC and Nano’s micro USB (make sure the cable has data wires)

- On the host PC, identify the serial device connected to the Nano

  - Mac: /dev/tty.usbmodem*

  - Linux: /dev/ttyACM*

- Connect a terminal emulator like screen to the serial device at baud rate 115200 (see screen usage): screen /dev/tty.usbmodem0123456789 115200

Then, just follow the prompts.
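If you'd rather not eyeball /dev for the serial device, something like the following sketch can pick it out. `find_nano_serial` is a hypothetical helper name, and it assumes the Nano is the only device matching those two patterns; with other USB serial gadgets attached it may grab the wrong one.

```shell
# Hypothetical helper: print the first serial device that looks like the Nano.
# $1 = directory to search (defaults to /dev)
find_nano_serial() {
    dev_dir="${1:-/dev}"
    for dev in "$dev_dir"/tty.usbmodem* "$dev_dir"/ttyACM*; do
        # An unmatched glob is left as a literal string, so check the path exists
        if [ -e "$dev" ]; then
            printf '%s\n' "$dev"
            return 0
        fi
    done
    return 1
}

# Usage:
#   screen "$(find_nano_serial)" 115200
```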

If you’re using a Mac running Catalina (10.15) there are some complications.

Install other software to taste; remember to grab the arm64/aarch64 version of binaries instead of arm/arm32.
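When scripting downloads, a small check like this sketch can help avoid grabbing the wrong binary. `download_arch` and the labels it prints are assumptions for illustration (projects name their builds differently); the `uname -m` values are the ones these boards commonly report.

```shell
# Hypothetical helper: map a machine name to a download-page style label.
# $1 = machine name (defaults to the output of `uname -m`)
download_arch() {
    case "${1:-$(uname -m)}" in
        aarch64|arm64) echo "arm64" ;;   # Jetson Nano / TX1 (64-bit ARM)
        armv6l|armv7l) echo "arm32" ;;   # 32-bit ARM, e.g. Raspbian on a Pi
        x86_64|amd64)  echo "amd64" ;;
        *)             echo "unknown" ;;
    esac
}

# Usage:
#   echo "Fetching the $(download_arch) build"
```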

Benchmarks

For our Raven Ridge-like APU with Vega GPU we run a series of benchmarks:

- 3DMark, PCMark, Cinebench

- Unigine (Heaven, Valley)

- Several games that have benchmark/demo modes (e.g. “Rise of the Tomb Raider”, “Shadow of Mordor”, etc.)

- Claymore/Phoenix Miner

- A few others

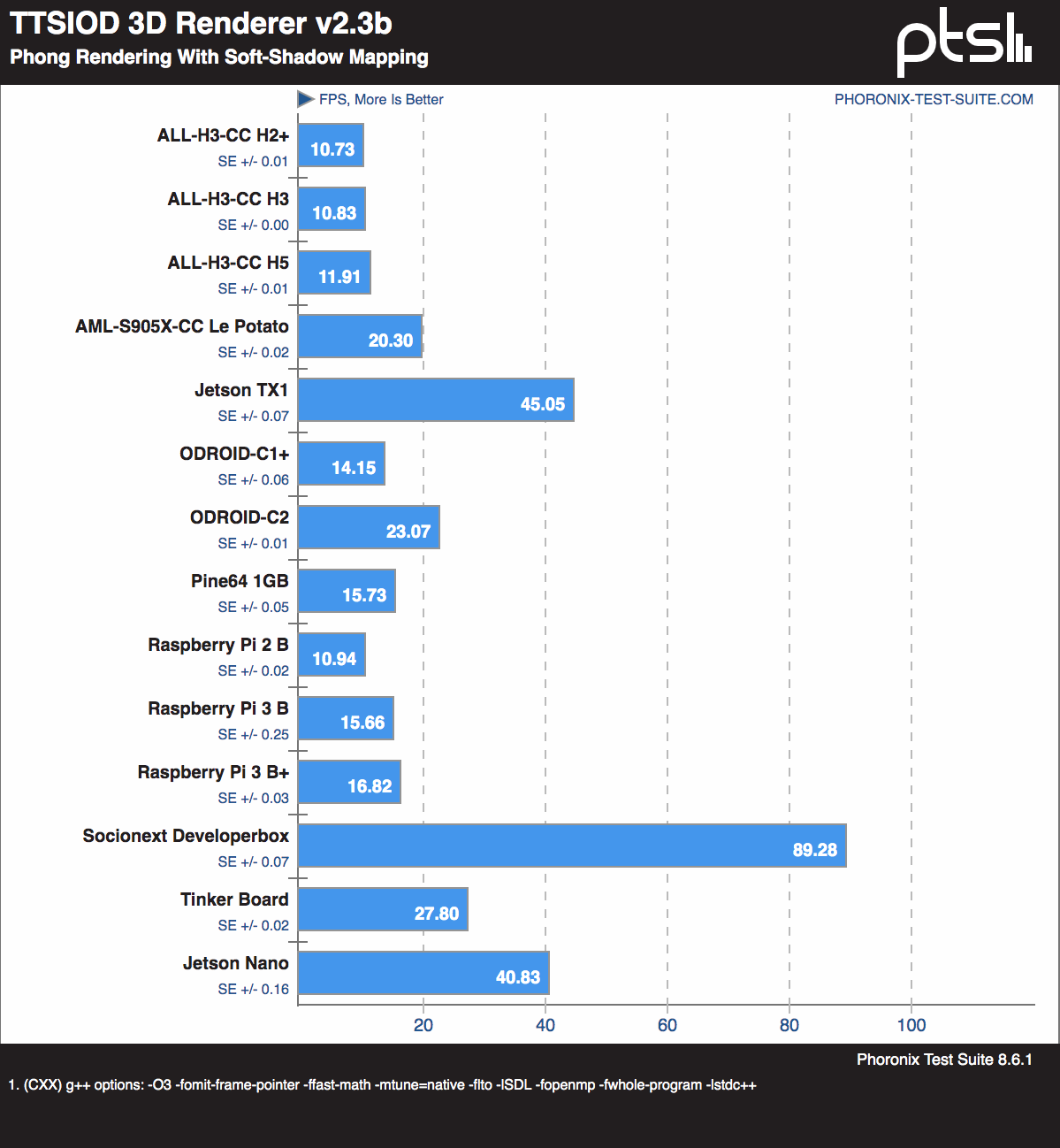

But they’re limited to Windows and/or x86. The de facto standard for ARM platforms seems to be the Phoronix Test Suite. In fall 2018, Phoronix did a comparison of a bunch of single-board computers that’s not exactly surprising but still interesting.

Purely for amusement, we’re also throwing in the results for the Raspberry Pi Zero which, to be fair, is in a completely different device class with a different target use case.

| Test | Pi Zero | Pi 3 B | Nano | Notes |
|---|---|---|---|---|
| glxgears | 107 | 560 | 2350 | FPS. The Zero is using “Full KMS”; without it, it only manages 7.7 FPS. The 3 B is using “Fake KMS”; “Full KMS” caused the display to stop working. |
| glmark2 | 399 | 383 | 1996 | On the Pis, several tests failed to run. |
| build runng | 3960 | 135 | 68 | Seconds. cargo clean; time cargo build of runng, like we did on the Pi Zero. |

Phoronix Test Suite

PTS is pretty nice. It provides an easy way to (re-)run a set of benchmarks based on a unique identifier. For example, to run the tests from the Fall 2018 ARM article:

sudo apt-get install -y php-cli php-xml

# Download PTS somewhere and run/compare against article

phoronix-test-suite benchmark 1809111-RA-ARMLINUX005

# Wait a few hours...

# Results are placed in ~/.phoronix-test-suite/test-results/

| Test | Pi Zero | Pi 3 B | Nano | TX1 | Notes |
|---|---|---|---|---|---|
| Tinymembench (memcpy) | 291 | 1297 | 3504 | 3862 | |
| TTSIOD 3D Renderer | | 15.66 | 40.83 | 45.05 | |
| 7-Zip Compression | 205 | 1863 | 3996 | 4526 | |
| C-Ray | | 2357 | 943 | 851 | Seconds (lower is better) |
| Primesieve | | 1543 | 466 | 401 | Seconds (lower is better) |
| AOBench | 778 | 333 | 190 | 165 | Seconds (lower is better) |
| FLAC Audio Encoding | 971.18 | 387.09 | 103.57 | 78.86 | Seconds (lower is better) |
| LAME MP3 Encoding | 780 | 352.66 | 143.82 | 113.14 | Seconds (lower is better) |
| Perl (Pod2html) | 5.3830 | 1.2945 | 0.7154 | 0.6007 | Seconds (lower is better) |
| PostgreSQL (Read Only) | | 6640 | 12410 | 16079 | |
| Redis (GET) | 34567 | 213067 | 568431 | 484688 | |
| PyBench | 76419 | 24349 | 7030 | 6348 | ms (lower is better) |
| Scikit-Learn | | 844 | 496 | 434 | Seconds (lower is better) |

The “Pi 3 B” and “TX1” columns are reproduced from the OpenBenchmarking.org results. There’s also an older set of benchmarks, 1703199-RI-ARMYARM4104.

Check out the graphs (woo hoo!):

These all seem to be predominantly CPU benchmarks where the TX1 predictably bests the Nano, mostly by 10-20%, owing to its roughly 20% higher CPU clock.

Don’t let the name “TTSIOD 3D Renderer” fool you, it’s a software renderer (i.e. non-hardware-accelerated; no GPUs were harmed by that test). Further evidenced by the “Socionext Developerbox” showing. Socionext isn’t some new, up-and-coming GPU company; that device has a 24-core ARM Cortex A53 @ 1 GHz (yes, 24, that’s not a typo).

There are more results for the Nano, including things like Nvidia TensorRT and temperature monitoring both with and without a fan. But GLmark2 is likely one of the few things that will run everywhere.
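To sanity-check the TX1-versus-Nano gap against the clock difference, here's a rough sketch that turns a pair of results into a percentage. `tx1_advantage` is a made-up helper name; the inputs are copied from the tables in this post, and treating 7-Zip as higher-is-better is an assumption since the table doesn't say.

```shell
# Hypothetical helper: percentage by which the TX1 result beats the Nano result.
# $1 = Nano value, $2 = TX1 value, $3 = "higher" or "lower" (which is better)
tx1_advantage() {
    awk -v nano="$1" -v tx1="$2" -v better="$3" 'BEGIN {
        ratio = (better == "lower") ? nano / tx1 : tx1 / nano
        printf "%.1f\n", (ratio - 1) * 100
    }'
}

printf 'CPU clock: %s%%\n' "$(tx1_advantage 1.43 1.73 higher)"
printf '7-Zip:     %s%%\n' "$(tx1_advantage 3996 4526 higher)"
printf 'AOBench:   %s%%\n' "$(tx1_advantage 190 165 lower)"
printf 'PyBench:   %s%%\n' "$(tx1_advantage 7030 6348 lower)"
```

By this arithmetic the clock gap is about 21%, and the three benchmarks shown land between roughly 10% and 15%.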

glxgears

On Nano:

# Need to disable vsync for Nvidia hardware

__GL_SYNC_TO_VBLANK=0 glxgears

On Pi:

sudo raspi-config

Selecting Advanced Options > GL Driver > GL (Full KMS) > Ok adds dtoverlay=vc4-kms-v3d to the bottom of /boot/config.txt. Reboot and run glxgears.

GLmark2

Getting GLmark2 working on the Nano is easy:

sudo apt-get install -y glmark2

On Pi, it’s currently broken.

You can use the commit right after Pi3 support was merged:

sudo apt-get install -y libpng-dev libjpeg-dev

git clone https://github.com/glmark2/glmark2.git

cd glmark2

git checkout 55150cfd2903f9435648a16e6da9427d99c059b4

There’s a build error:

../src/gl-state-egl.cpp: In member function ‘bool GLStateEGL::gotValidDisplay()’:

../src/gl-state-egl.cpp:448:17: error: ‘GLMARK2_NATIVE_EGL_DISPLAY_ENUM’ was not declared in this scope

GLMARK2_NATIVE_EGL_DISPLAY_ENUM, native_display_, NULL);

^~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

In src/gl-state-egl.cpp at line 427 add:

#else

// Platforms not in the above platform enums fall back to eglGetDisplay.

#define GLMARK2_NATIVE_EGL_DISPLAY_ENUM 0

Build everything and run it:

# `dispmanx-glesv2` is for the Pi

./waf configure --with-flavors=dispmanx-glesv2

./waf

sudo ./waf install

glmark2-es2-dispmanx --fullscreen

If it fails with “failed to add service: already-in-use?”, take a look at:

Both mention commenting out dtoverlay=vc4-kms-v3d in /boot/config.txt, which was added when we enabled “GL (Full KMS)”.
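If you flip between KMS modes a lot, commenting out that line can be scripted. This is only a sketch: `disable_full_kms` is a hypothetical helper, it assumes the overlay sits on its own line exactly as raspi-config wrote it, and on a real Pi you'd run it with sudo against /boot/config.txt and then reboot.

```shell
# Hypothetical helper: comment out the Full KMS overlay in a config.txt-style file.
# $1 = path to the config file
disable_full_kms() {
    config="$1"
    tmp="$(mktemp)" || return 1
    # Prefix the overlay line with '#'; lines already commented out do not match
    sed 's/^dtoverlay=vc4-kms-v3d[[:space:]]*$/#&/' "$config" > "$tmp" \
        && cat "$tmp" > "$config"
    status=$?
    rm -f "$tmp"
    return "$status"
}

# Usage (try it on a copy first):
#   cp /boot/config.txt /tmp/config.txt && disable_full_kms /tmp/config.txt
```

Writing through a temporary file instead of using sed's in-place flag keeps the sketch working with both GNU and BSD sed.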

Hello AI World

After getting your system set up, take a look at “Hello AI World”, which does image recognition and is pre-trained with 1000 objects. Start with “Building the Repo from Source”. It took a while to install dependencies, but then everything builds pretty quickly.

cd jetson-inference/build/aarch64/bin

# Recognize what's in orange_0.jpg and place results in output.jpg

./imagenet-console orange_0.jpg output.jpg

# If you have a camera attached via CSI (e.g. Raspberry Pi Camera v2)

./imagenet-camera googlenet # or `alexnet`